Leo Mouta

My Bio

My name is Leo Mouta. I am currently a Master’s student in Carnegie Mellon University’s Robotic Systems Development (MRSD) program, class of 2025. Although currently a roboticist, my background is in aerospace engineering (MS - ISAE-SUPAERO, France; BS - Aeronautics Institute of Technology, Brazil). I also dabble in quantum computing (Minor Degree in Engineering Physics - Aeronautics Institute of Technology, Brazil) and cybersecurity.

Fields of Interest

My main interest is autonomous systems, with a particular focus on:

- Embedded systems

- Autonomy and planning

- Guidance and navigation

- Control theory

Other interests include cybersecurity, military history and literature. I also happen to know a vast collection of dad jokes and useless trivia.

Resume

Click here to download my resume.

CMU Capstone Project

The MRSD program at CMU includes a three-semester capstone project, developed by student teams. I was part of team CreoClean, which used robotics to clean toxic creosote from factories. The project was sponsored by Koppers Inc. Link to the team’s website here.

Project video:

Videos

- This project demonstrates a real-time home alarm system. It includes a PIR motion sensor, keypad, and buzzer, running on an nRF52840 MCU (Cortex-M4 processor). Features include password hashing in kernel space, separation of user space from kernel space, real-time task scheduling, and custom peripheral drivers. The system provides secure authentication, motion-triggered alarms, and a stealthy panic mode that discreetly alerts authorities. All done in bare-metal C and ARM assembly!

- Using AirSim, Unreal Engine, and Cesium Ion, I was able to generate a photorealistic map of Pittsburgh (centered around CMU). Enjoy the view!

- For our robot autonomy class, my group and I used computer vision and path planning to develop code for a whiteboard-cleaning robot. The robot is able to erase certain colors while preserving others. Pretty entertaining video if you ask me :-)

- In this video, I programmed a quadcopter to autonomously intercept a Roomba robot, which was controlled by a human operator. The drone then had to follow the Roomba around before landing automatically at the base point. Interception was achieved by modeling the quadrotor’s behavior as a Markov decision process (MDP):

- In this video, I simulated a prototype for an autonomous differential-drive robot using ROS and Gazebo. The program runs the ROS navigation stack on top of the simulated hardware, which enables the robot to move from one point to another while avoiding obstacles. Localization uses adaptive Monte Carlo localization (AMCL) on the laser sensor input, while pathfinding is based on A*. The environment map on the right was built beforehand using FastSLAM:

- I developed this demonstration while working at the JPMorgan Chase & Co. AI Maker Space. My boss had asked me to do something nice with the drones for Christmas :-). In this video, the drones spiral along a common axis, changing their colors as a function of their position:

- This video shows an exercise developed as part of the computer vision course at Carnegie Mellon University. Given two pictures of the same object taken from different views, it is possible to reconstruct the object in 3D from its 2D pictures:

- This video shows a Kinova arm tasked with grasping an object through a hole. The project involved object identification, planning under constraints, and control. Developed while I was working at the JPMorgan Chase & Co. AI Maker Space @ CMU:

- Monocular human 3D pose detection and reconstruction using an OpenVINO demo running on an Intel Movidius Neural Compute Stick VPU. Developed while I was working at the JPMorgan Chase & Co. AI Maker Space @ CMU:

Technical Writings

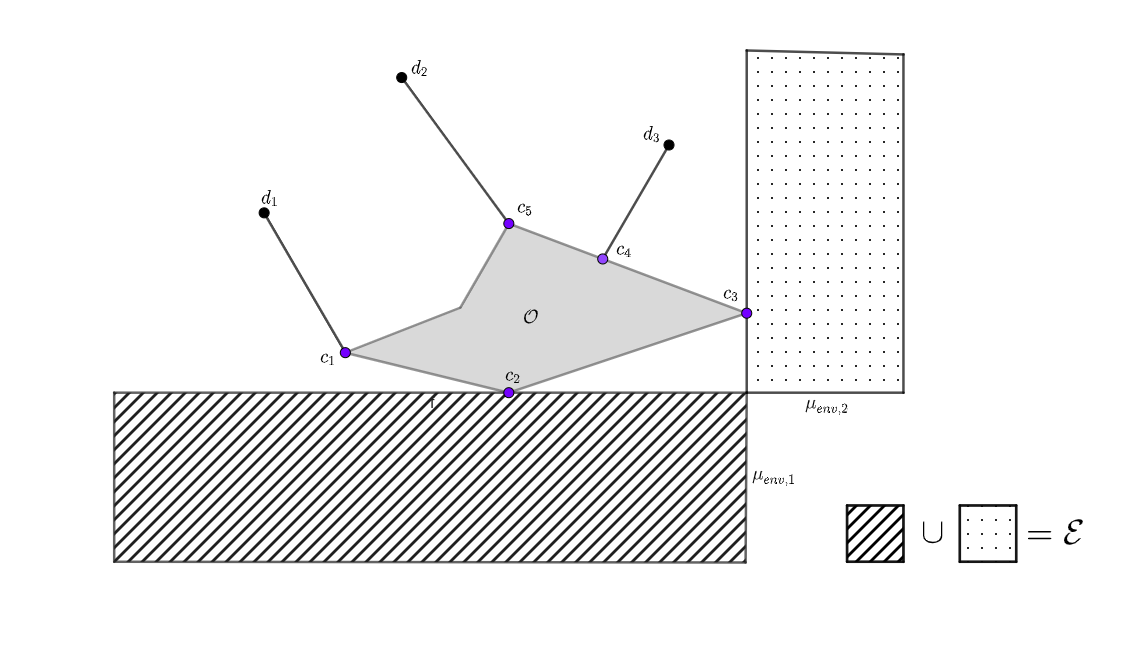

- Manipulating a cable-suspended object with multiple UAVs and environment contacts in 2D

Lecture Notes

- Introduction to public-key cryptography (Portuguese)

- Introduction to quantum computing (Portuguese)

Additional Content

- History research project (done at ISAE-SUPAERO): Comparison of Historical and Fictional Views Concerning the Battle of Borodino (1812)